Best practices | TestCases | Trial runs

Before you start running tests in ExecutionLists, we recommend that you first do some trial runs of your TestCases in the ScratchBook.

The ScratchBook doesn't store results permanently, which makes it an excellent playground to find and fix instabilities in your tests. Typical instabilities are identification issues, missing wait times for dependent controls, or pop-up dialogs that you didn't consider when you designed your test flow.

Make your TestCases as stable and reliable as you can. Once you're in the test execution phase, you don't want to spend valuable time figuring out whether a failed result points to a flaw in your application or in your test. Plus, ExecutionLists store results permanently. In heavily regulated environments where you have to keep a record of all test results, experimenting with TestCases in ExecutionLists muddles up your results record.

This topic takes you through testing your tests:

- How to make results easier to analyze.
- How to handle issues in your tests.

Make results easier to analyze

Here are a few things that make analyzing results much easier:

-

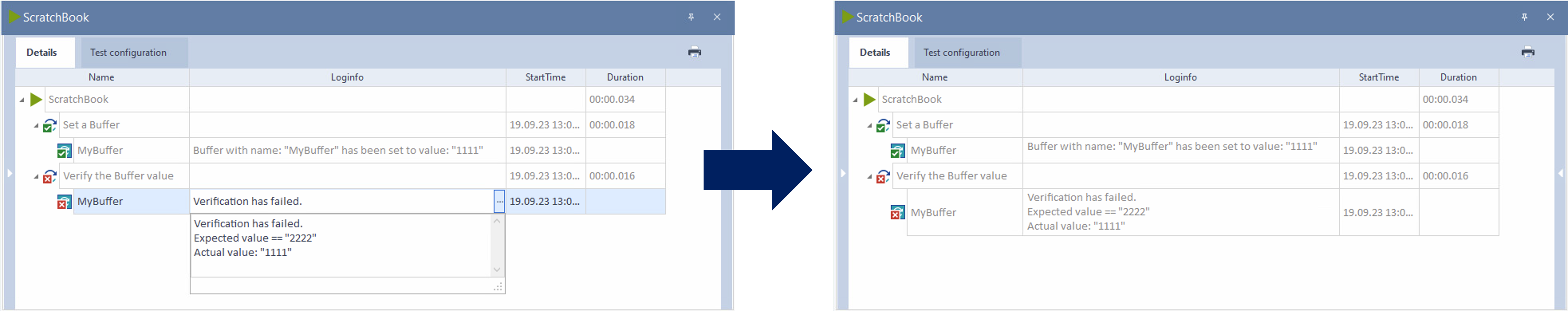

To show more than one line in the LogInfo column, change the results view and enable Show multiline logs.

Results in the ScratchBook: default view → with Show multiline logs activated

-

To take a screenshot of your application whenever a graphical user interface TestStep fails, enable the setting Make screenshot on failed test steps.

When you run tests in the ScratchBook, you can view the screenshot in the storage location you defined in the setting. When you run tests in ExecutionLists, Tosca adds the screenshot to the test results.

-

To review your tests in action and identify areas for improvement, video record your graphical user interface tests. Please note that this is still an early access feature.

Handle issues in your tests

If your TestCase fails, expand the failed TestStep and check the LogInfo column for details.

Is it a steering issue?

If Tosca can't find or steer a screen or control, check your application. Alternatively, if you've enabled the setting Make screenshot on failed test steps, check the screenshot.

- Does your screen look as expected at this particular point in the test?

  Check your Module, especially the identifiers you chose for your screen or control. You may need to update the Module. For example, rescan the Module to choose better identifiers, or update identifiers after a code change in your application.

- Are there any unexpected elements on your screen?

  Check for behaviors that you may have missed during test design, for example confirmation dialogs or pop-ups that block the user interface. Ideally, you adapt your TestCase to handle this behavior. If the behavior is sporadic, you may need to set up recovery procedures instead.

Is it a verification issue?

Compare the expected result and the actual result in the LogInfo column. Also, if you've enabled the setting Make screenshot on failed test steps, check the screenshot.

- Are you verifying the right control?

  Check your TestCase to make sure you're verifying the right control. That's especially true for tables, where it's easy to accidentally define the wrong cell. You may need to update your TestCase.

- Is it a legitimate verification issue? In other words: you're definitely verifying the right control, and there's a difference between your TestCase and the application.

  Check your TestCase and your application to figure out which one is wrong. You may have to go through both, step by step, to find the source of the issue.

  If the verification issue comes from a bug in your application, make sure to set up error handling. Don't worry, even with error handling, Tosca still reports the verification failure. As long as the bug exists, you'll know. Error handling just gets your test run back on track, so you still get meaningful results for the rest of your ExecutionList.

  Let's say your TestCase adds five products to the cart of your webshop. You're running your tests in the ScratchBook, and Tosca reports a verification issue with the total price. During your investigation, you realize that one product has a bug: your application doesn't always calculate the right tax on the first try. In this case, you could create a Recovery Scenario that removes the product from the cart and then adds it again.
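To make the idea behind the webshop example more concrete, here is a minimal Python sketch of "report the failure, recover, then continue". It is a conceptual illustration only, not Tosca functionality or a Tosca API: `RunLog`, `run_step_with_recovery`, and `FlakyCart` are hypothetical names, and the cart's "wrong tax on first add" behavior is a made-up stand-in for the bug described above.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RunLog:
    """Hypothetical result log; failures stay visible even after recovery."""
    entries: List[str] = field(default_factory=list)


def run_step_with_recovery(
    name: str,
    step: Callable[[], bool],
    recover: Callable[[], None],
    log: RunLog,
    max_retries: int = 1,
) -> bool:
    """Run a verification step; on failure, log it, recover, and retry."""
    for attempt in range(max_retries + 1):
        if step():
            log.entries.append(f"{name}: passed (attempt {attempt + 1})")
            return True
        # The failure is always recorded, so the bug remains visible.
        log.entries.append(f"{name}: FAILED (attempt {attempt + 1})")
        if attempt < max_retries:
            recover()
            log.entries.append(f"{name}: recovery executed")
    return False


class FlakyCart:
    """Hypothetical cart that calculates the wrong total on the first add."""

    def __init__(self) -> None:
        self.add_count = 0
        self.total = 0.0

    def add_product(self) -> None:
        self.add_count += 1
        # Assumed bug: tax is missing the first time the product is added.
        self.total = 100.0 if self.add_count == 1 else 120.0

    def remove_product(self) -> None:
        self.total = 0.0

    def total_is_correct(self) -> bool:
        return self.total == 120.0
```

In this sketch, the recovery callback would remove the product and add it again, mirroring the Recovery Scenario from the example. The first failed verification stays in the log, while the retry lets the remaining steps run on a cart in the expected state.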

Is it a timeout issue?

If Tosca times out on screens or controls, check your application. Alternatively, if you've enabled the setting Make screenshot on failed test steps, check the screenshot.

- Are all controls active that you expect at this particular point in the test?

  Check your application for dependencies between controls. You may need to add a dynamic wait time, so Tosca waits until a specific control becomes available. For example, a progress bar may have to reach 100% before the Next button activates.

- Did your application take longer to load than expected?

  Check with your QA team. If the screen or control really shouldn't take this long to load, leave your TestCase as it is. That way, you get the error until your application is fixed.

  If you're in the earlier stages of development where you expect your application to be slow, adjust your TestCase. You can increase the default time that Tosca waits for all screens and controls, or you can add wait times for specific controls or TestCases. That way, you don't get failed tests for expected timeouts.

For detailed information on wait times and important considerations, check out "Best practices | TestCases | Wait times".
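As a rough illustration of what a dynamic wait does, compared to a fixed pause, the following Python sketch polls a condition until it becomes true or a timeout elapses. This is not how you configure waits in Tosca and not a Tosca API: `wait_until` and `FakeProgressBar` are hypothetical names, and the progress bar that advances 25% per check is an invented stand-in for the progress bar and Next button example above.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout_s: float = 10.0,
    poll_interval_s: float = 0.1,
) -> bool:
    """Poll `condition` until it returns True or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if condition():
            return True  # control became available: continue immediately
        if time.monotonic() >= deadline:
            return False  # timed out: the caller reports a timeout failure
        time.sleep(poll_interval_s)


class FakeProgressBar:
    """Hypothetical control: progress advances 25% each time it is checked."""

    def __init__(self) -> None:
        self.percent = 0

    def next_button_enabled(self) -> bool:
        self.percent = min(100, self.percent + 25)
        return self.percent >= 100
```

Calling `wait_until(bar.next_button_enabled, timeout_s=2.0, poll_interval_s=0.01)` on a fresh `FakeProgressBar` returns as soon as the bar reaches 100%, instead of always waiting the full two seconds. A condition that never becomes true returns `False` after the timeout, which corresponds to the timeout failure you would want to keep seeing if the application is genuinely too slow.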

What's next

If you haven't yet, check out our other best practices articles.